Design an SPF


Stage One: Understand the Landscape, Set Goals and Priorities

During stage one, system leaders should decide whether or not they need

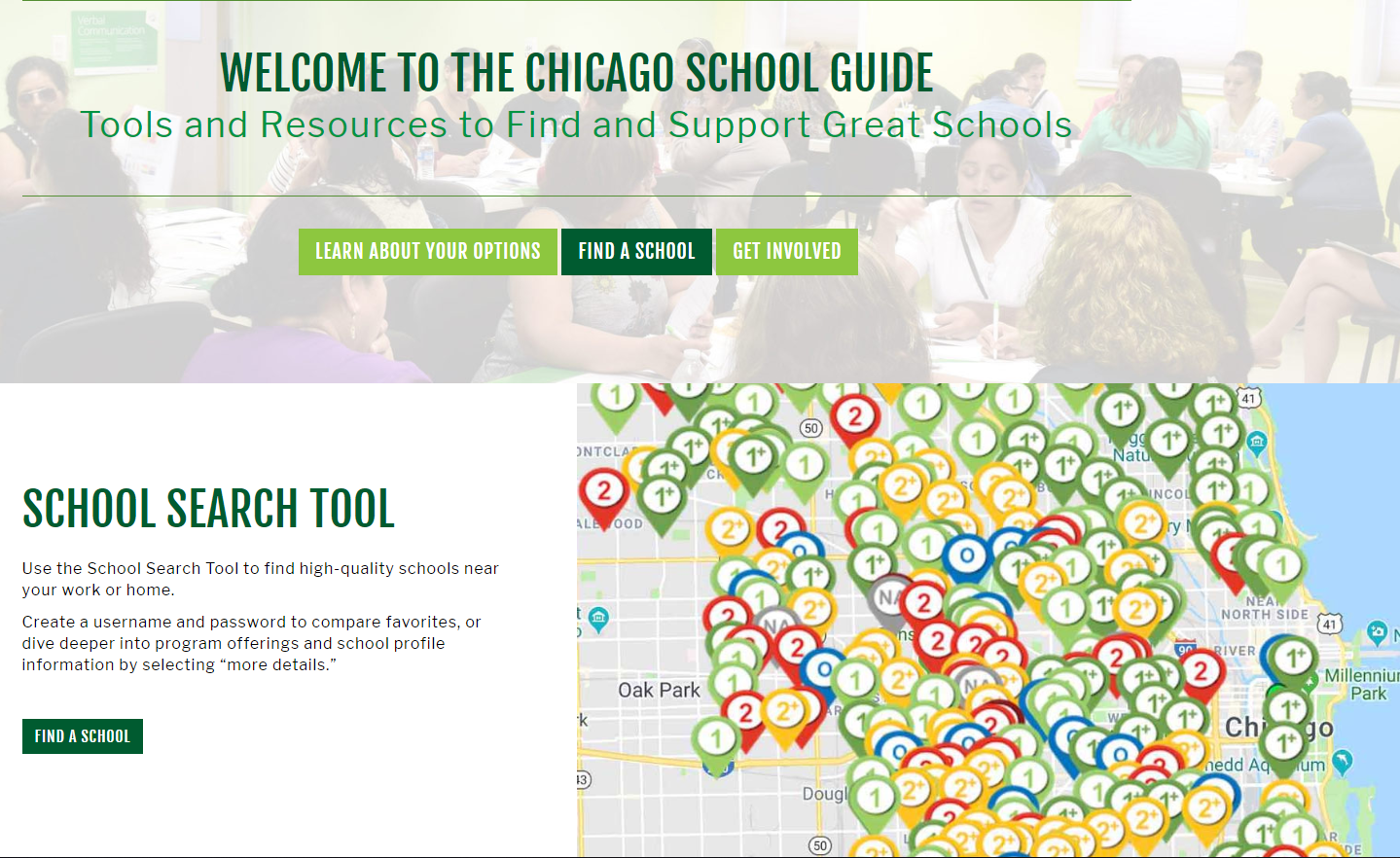

In Chicago, legislation that brought about mayoral control over schools also mandated the creation of a local SPF, which the city uses to determine a school's level of autonomy under the oversight of a local school council.

an SPF, and what they will use their SPF for, in collaboration

In Chicago, legislation that brought about mayoral control over schools also mandated the creation of a local SPF, which the city uses to determine a school's level of autonomy under the oversight of a local school council.

an SPF, and what they will use their SPF for, in collaboration

In Denver, the school district has recently convened a working group of educators, school leaders, parents, and other stakeholders to reimagine the SPF.

and consultation with other stakeholders, such as families, educators, and school leaders. Developing and implementing a high-quality SPF requires significant investment of time and resources, and local leaders should be certain that an SPF is necessary.

In Denver, the school district has recently convened a working group of educators, school leaders, parents, and other stakeholders to reimagine the SPF.

and consultation with other stakeholders, such as families, educators, and school leaders. Developing and implementing a high-quality SPF requires significant investment of time and resources, and local leaders should be certain that an SPF is necessary.

In D.C., leaders saw a need for a common tool across schools to guide charter authorizer decisions and inform parents as the charter sector grew.

In D.C., leaders saw a need for a common tool across schools to guide charter authorizer decisions and inform parents as the charter sector grew.

One of the first steps should be to map the data systems and resources currently available at the local and state levels, and then decide what an SPF will add to this equation. What value will it provide? Ultimately, an SPF should add to existing resources and clarify, not confuse, stakeholders’ understanding of school performance.


One consideration in many communities will be the presence and role of a state school rating. Under the Every Student Succeeds Act (ESSA), most states have created school ratings for the purposes of federal accountability. In a state with an existing rating or report card, adapting

In New Orleans, leaders of NOLA Public Schools use state performance scores as a local school performance framework to guide decisions. Leaders previously considered creating their own SPF, but feedback from community members dissuaded them. Instead, the school district has been able to adapt the state SPF to local needs.

the state accountability ratings might serve some goals well and could streamline the process of SPF creation. However, locally developed SPFs have the opportunity to provide a more nuanced picture

In New Orleans, leaders of NOLA Public Schools use state performance scores as a local school performance framework to guide decisions. Leaders previously considered creating their own SPF, but feedback from community members dissuaded them. Instead, the school district has been able to adapt the state SPF to local needs.

the state accountability ratings might serve some goals well and could streamline the process of SPF creation. However, locally developed SPFs have the opportunity to provide a more nuanced picture

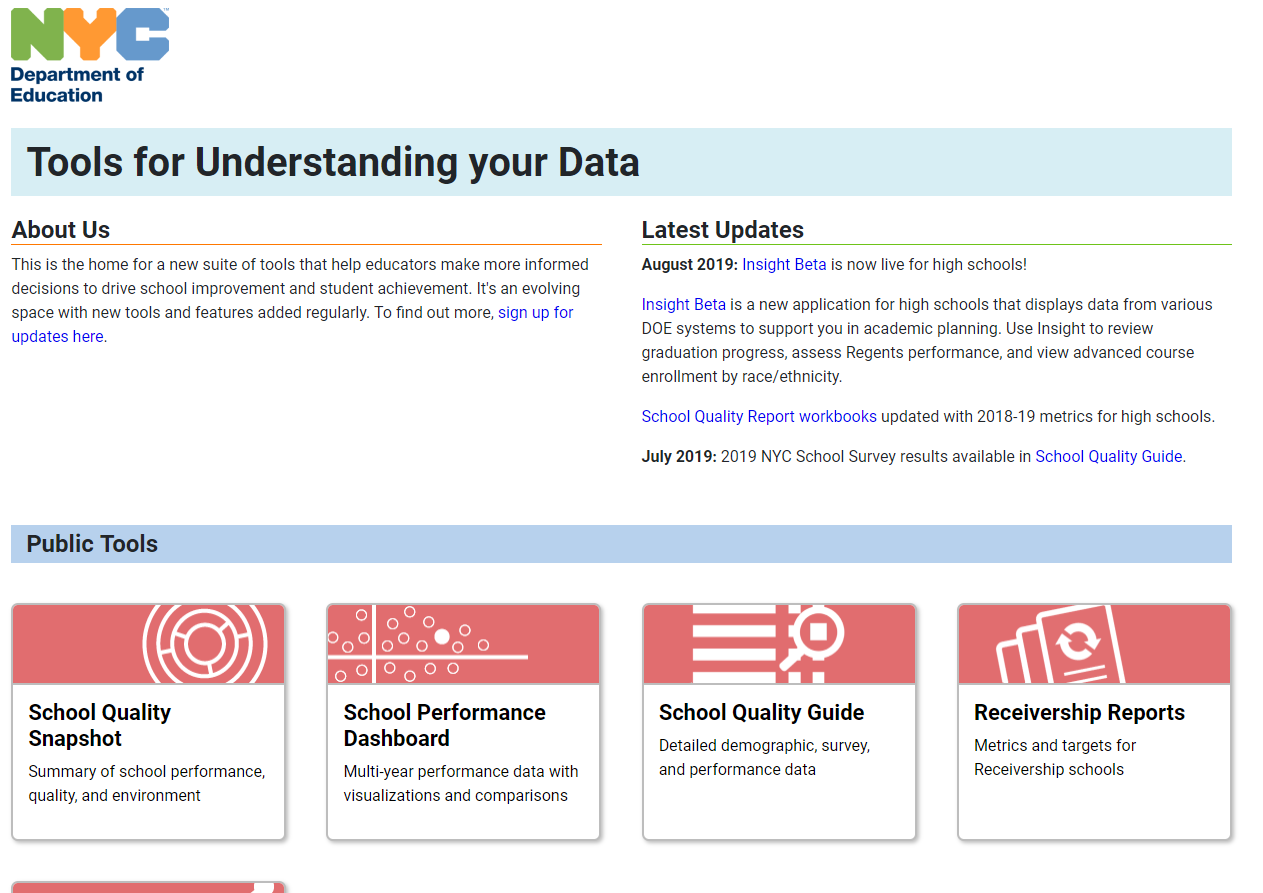

In New York City, leaders reorganized their SPF to align with their "Framework for Great Schools" so that school quality reports would reflect the district's revised theory of action for school improvement.

of school performance that reflects local goals and community priorities. Local leaders also have greater on-the-ground responsibility and decision-making power than state leaders.

In New York City, leaders reorganized their SPF to align with their "Framework for Great Schools" so that school quality reports would reflect the district's revised theory of action for school improvement.

of school performance that reflects local goals and community priorities. Local leaders also have greater on-the-ground responsibility and decision-making power than state leaders.

System leaders should discuss and prioritize use cases at the outset of the process, and consider how an SPF will support system goals.

Once the decision to create or revise an SPF is set, design and implementation will take at least one school year. System leaders should set realistic benchmarks and deadlines to maintain momentum.

Questions for local leaders to consider:

- Why is an SPF necessary now? What will it add to our school system?

- What use cases do we envision for the SPF, and how will we prioritize them?

- Do we have the staff capacity, resources, and expertise to design, implement, and communicate an SPF with a high degree of stakeholder engagement?

- Do we have buy-in (or the potential for buy-in) across stakeholders?

- Do we have sufficient data to create a reliable and high-quality SPF?

- What will be the role of a state rating in our SPF, and how will our SPF relate to other existing resources and systems?

Stage Two: Design and Decision Making

During stage two, system leaders should decide on key design features of the SPF, including metrics and their weights. For the SPF to be effective, district leaders will need to differentiate SPF reports and resources for various audiences, and test the SPF with stakeholders to determine whether it works as intended and whether there are unintended consequences that need addressing.

All the SPFs we looked at included annual academic metrics, like test performance, test score growth, and graduation rates. Four of the five SPFs also included other metrics like attendance, school climate, survey results, or post-secondary results.

One of the most important steps is to determine what metrics to include and if those metrics align with system goals. A logical and effective SPF should clearly link up with long-term goals for students across schools. For example, if system leaders want to emphasize academic growth, then growth should be included as a measure and given substantial weight in the system. School leaders who want to emphasize additional metrics, like academic gaps between subgroups or school climate and culture, should include those metrics in an SPF.

Chicago system leaders wanted to emphasize academic growth, so they adopted an assessment in grades 3-8, the NWEA MAP, which measures growth and performance across grade levels. A substantial proportion of Chicago's elementary/middle school ratings are based on student growth rates and performance among priority student subgroups.

In New York City, the SPF includes measures drawn from student surveys and from periodic on-site reviews of the school. However, these reviews are not conducted annually in every school, so data may be outdated.
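The idea of giving a prioritized metric "substantial weight" can be made concrete with a weighted average. The sketch below is illustrative only: the metric names, the 0-100 scale, and the weights in `WEIGHTS` are assumptions made for this example and are not drawn from any district's actual SPF.

```python
from typing import Dict

# Hypothetical weights for illustration only; not taken from any real SPF.
# Growth carries the largest share because it is the assumed priority.
WEIGHTS: Dict[str, float] = {
    "growth": 0.5,
    "proficiency": 0.3,
    "climate_survey": 0.2,
}


def composite_score(metric_scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return the weighted average of metric scores (each assumed to be 0-100)."""
    # Weights are shares of the overall score, so they should total 1.
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    # Every weighted metric needs a score; fail loudly instead of guessing.
    missing = set(weights) - set(metric_scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * metric_scores[name] for name in weights)


# A made-up school with scores on each metric.
school = {"growth": 80.0, "proficiency": 60.0, "climate_survey": 70.0}
print(composite_score(school, WEIGHTS))
```

Writing the weights down in one place like this makes the link between system goals and the rating explicit: anyone can see which metric counts most, and changing a priority means changing a single number.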


While there is no perfect number of metrics in an SPF, the right choice will depend on the district’s definition of success and which use cases district leaders want to serve. One important trade-off to consider is that including too many metrics can create confusion around what is most important, while too few metrics can create a blunt definition of school success. Debates among stakeholders about which metrics to choose, and how many, can be the most fraught and drawn-out moments of an SPF creation process. Several districts reported difficulties at this stage of the process. But grounding the discussion in clear priorities for use cases can help guide metric decisions productively.

Denver and New York City had a high number of metrics in their SPFs. For example, New York includes 20 metrics in its student achievement sub-rating, while Denver uses more than 10 metrics to rate just student growth in high school. New Orleans had the fewest metrics, with only three factors influencing elementary school ratings.

An important consideration for district leaders is whether and how to include a summative score in the SPF. On the one hand, parents usually find a summative rating helpful in using an SPF, especially for school choice decisions, and a summative rating can bring clarity to a variety of system- and school-level decisions. However, some local leaders and stakeholders believe that summative ratings can be misleading and can encourage a narrow view of school quality. Ultimately, there is no one right answer, and this decision should be informed by SPF values, goals, and implementation decisions.

One piece of this clarity is designing and labeling the ratings themselves. For example, Chicago schools are given a rating of 1+, 1, 2+, 2, or 3, rather than using a 1-5 scale or explanatory labels.

Of the five SPFs we examined closely, only one, New York City, omitted a summative rating. All others had a three- to five-point rating system of some kind.
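For systems that do choose a summative rating, one common way to assign it is to convert an overall score into a labeled band using cut scores. The sketch below is a hypothetical illustration, not any district's method: the labels borrow Chicago's rating names (assuming 1+ is the highest), but the cut scores in `RATING_BANDS` and the 0-100 scale are invented for this example.

```python
from typing import List, Tuple

# Hypothetical cut scores, highest band first. Only the labels come from
# Chicago's rating names; the thresholds are invented for illustration.
RATING_BANDS: List[Tuple[float, str]] = [
    (80.0, "1+"),
    (65.0, "1"),
    (50.0, "2+"),
    (35.0, "2"),
    (0.0, "3"),
]


def summative_rating(score: float, bands: List[Tuple[float, str]] = RATING_BANDS) -> str:
    """Return the label of the first band whose minimum score is met."""
    if not 0.0 <= score <= 100.0:
        raise ValueError("score must be between 0 and 100")
    # Bands are ordered from highest minimum to lowest, so the first match wins.
    for minimum, label in bands:
        if score >= minimum:
            return label
    raise ValueError("bands must include a band with a minimum of 0")


print(summative_rating(72.0))
```

Where the cut scores sit is a policy decision as much as a technical one, which is why the labels and thresholds belong in the same stakeholder conversation as the decision to use a summative rating at all.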

Throughout the design stage, district leaders should test SPF designs with various user groups and revise the SPF based on their input. District leaders should consider creating different ways of viewing SPF results aligned to the needs of different audiences. This does not mean creating parallel SPF ratings, just prioritizing and displaying information differently (e.g., nuanced, detailed reports for school leaders and/or high-level summations for district leaders and parents). School systems can easily get bogged down in this stage of the process, negotiating the pros and cons of different metric possibilities and weighting methods. That is one of the reasons that setting goals and prioritizing use cases at the outset of the process can bring clarity.

See, for example, different versions of NYC's school quality reports.

Questions for local leaders to consider:

- Which metrics, and how many, should we include in our SPF?

- Do our metrics align with our intended goals?

- Are summative ratings appropriate for our system? If so, how should summative scores be assigned?

- Did we engage a variety of stakeholders (e.g., parents, community leaders, advocacy organizations) in our design process?

- Did we design differentiated reports or resources for different audiences?

- Did we test our SPF with real-world system data before implementation?

Stage Three: Implementation, Evaluation, and Ongoing Sustainability

During stage three, district leaders should focus on putting policies and procedures in place to prepare for implementation. These policies and procedures should make clear to stakeholders if and how the SPF will be reviewed and amended over time.

Chicago and D.C. each publish a comprehensive manual explaining how their ratings are calculated and what the ratings mean for decisions like charter school authorization or school intervention.

An important step for district leaders is to capture all the SPF policies in clear and accessible documentation. This documentation should cover the goals of the SPF; the metrics, weights, and targets; the actions district leaders will take based on SPF performance; and the SPF methodology.
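One way to keep that documentation complete is to hold its required parts in a single structured record that can be checked for gaps. The sketch below is a minimal illustration under stated assumptions: the class names `MetricSpec` and `SPFDocumentation`, the field names, and the sample values are all hypothetical and do not describe any district's manual.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MetricSpec:
    name: str
    weight: float  # share of the overall score, between 0 and 1
    target: float  # hypothetical target on the metric's own scale


@dataclass
class SPFDocumentation:
    goals: List[str]
    metrics: List[MetricSpec]
    actions: List[str]  # what leaders will do based on SPF performance
    methodology: str

    def problems(self) -> List[str]:
        """List gaps that would leave the documentation incomplete."""
        found = []
        if not self.goals:
            found.append("no goals listed")
        if not self.metrics:
            found.append("no metrics listed")
        elif abs(sum(m.weight for m in self.metrics) - 1.0) > 1e-9:
            found.append("metric weights do not sum to 1")
        if not self.actions:
            found.append("no actions tied to SPF performance")
        if not self.methodology.strip():
            found.append("methodology is empty")
        return found


# Made-up example values, for illustration only.
doc = SPFDocumentation(
    goals=["Inform families choosing schools"],
    metrics=[MetricSpec("growth", 0.6, 65.0), MetricSpec("proficiency", 0.4, 50.0)],
    actions=["Schools in the lowest band receive a support review"],
    methodology="Weighted average of metric scores on a 0-100 scale.",
)
print(doc.problems())
```

An empty list from `problems()` means each of the four required parts is present. It says nothing about whether the documentation is clear or accessible, which still depends on review by the people who will use it.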

To ensure a durable SPF, district leaders must focus on the SPF's stability, durability, and improvement over time. As seen in the local SPF examples, SPFs can change dramatically with a change in system leadership; however, if an SPF is well established and well-liked by a variety of community members, it can withstand various kinds of transition.

After a mayoral transition and a change in chancellors, New York City revamped its SPF and eliminated summative ratings from the system.

When New Orleans schools transitioned from the control of the state-run Recovery School District to the locally elected school board, the school board opted to retain and adapt the state ratings as a core part of the system in large part because they were well known to parents and other key stakeholders.

Stability is also key to building credibility. If the metrics in an SPF are changing constantly, school leaders, educators, and the public will likely lose faith in the SPF as a reliable tool, and it will be harder to track progress accurately over time.

Leaders in Chicago credit the stability of their SPF since 2013 with creating strong credibility among different stakeholders and user groups, especially school principals. In contrast, Denver's changes in methods and additional metrics have impacted the credibility of the system with key stakeholders.

But stability does not mean that district leaders should forgo regular evaluation. There should be consistent cycles of analysis and evaluation after the SPF goes into effect to ensure it is functioning as intended and being used as anticipated, and to identify areas for improvement or additional user supports. Before implementation, district leaders should determine and then communicate a process for regular review and improvement of the SPF.

Questions for local leaders to consider:

- Did implementation roll out smoothly and is the SPF being used for its intended purposes by relevant stakeholders? Is the SPF serving the purposes for which it was designed?

- Did the initial implementation and evaluation reveal any technical problems or unintended impacts of the SPF?

- What is our timeline to review and revise the SPF at regular intervals?

- Have we documented the policies and details behind the SPF in clear and accessible documentation?